The gap between trying AI and trusting it comes down to one thing: Does it strengthen or break the instructional system teachers already use?

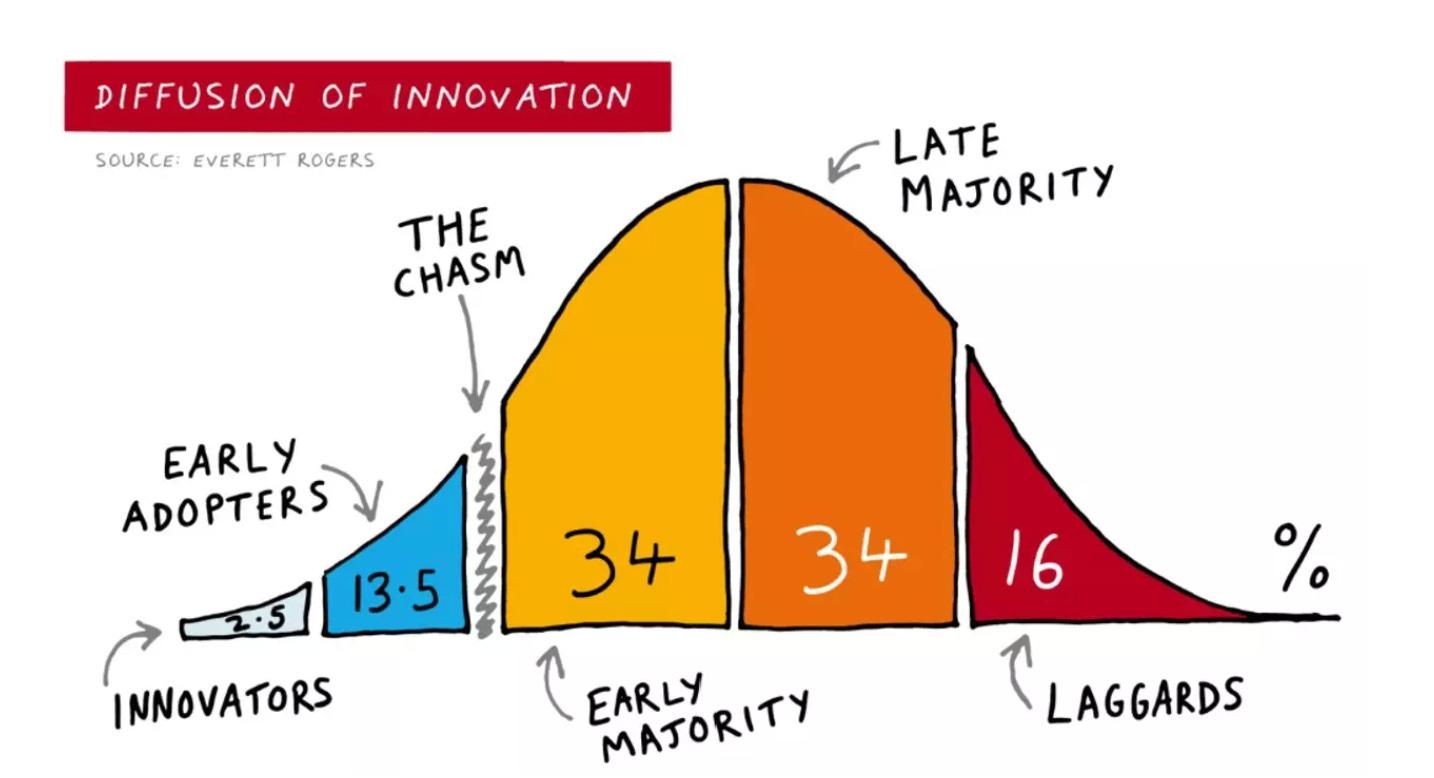

A pattern is emerging in how teachers adopt AI tools. According to Rogers’ Diffusion of Innovations framework, any new technology spreads through five distinct groups: innovators (2.5%), early adopters (13.5%), early majority (34%), late majority (34%), and laggards (16%).

Right now, AI in education finds itself at a critical juncture. Innovators (the risk-takers who experiment with new tools) are using AI enthusiastically, often building workarounds for limitations and compensating for misalignment through their own expertise. Early adopters (the respected opinion leaders) are selectively integrating AI into specific parts of their practice.

But the early majority (the pragmatic 34% who determine whether innovation actually scales) are making different calculations. They’re asking “Does this fit how I actually teach?”

The difference isn’t about features or capabilities. It’s about compatibility with the instructional system teachers already use.

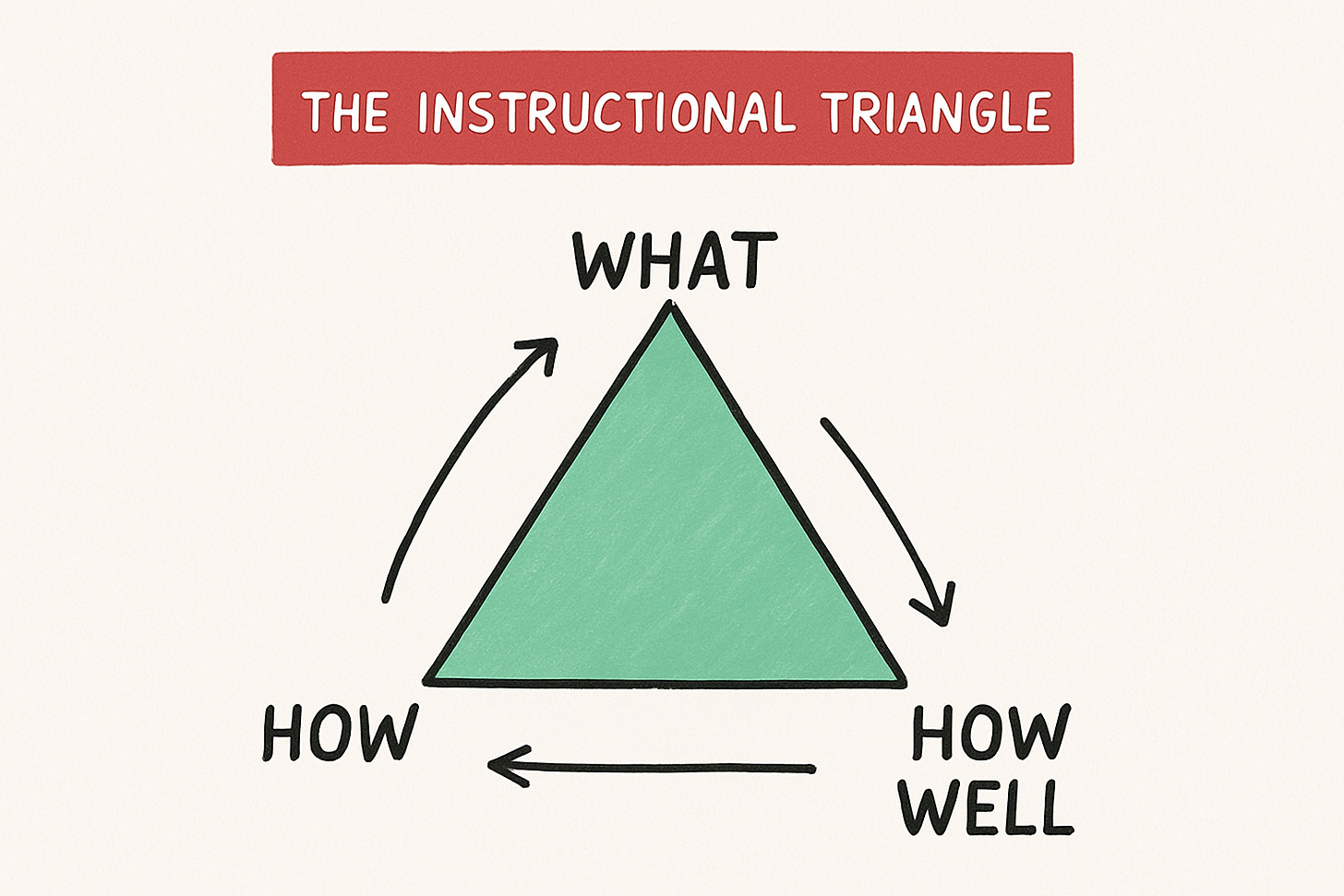

The Instructional Triangle (System)

Early majority teachers operate within these parameters:

District-adopted curriculum maps they must follow

Pacing guides that define when standards get taught

Common formative assessments created by grade-level teams

Data meetings where they explain student progress toward specific objectives

To be clear, these are NOT obstacles to work around. They are the proven instructional system that AI needs to support.

That system is built on the Curriculum Coherence Triangle (or the Instructional Triangle), the alignment between three elements that must connect in a specific order:

1. WHAT students need to learn (standards, learning objectives)

2. HOW WELL they’re learning it (assessment, evidence)

3. HOW to teach it (instructional strategies, methods)

This sequence—starting with learning goals, defining success criteria, then designing instruction—is the structure of backward design (Wiggins & McTighe, 1998), the foundation of Marzano’s guaranteed and viable curriculum research, and the basis for every state’s standards-based instruction framework.

When these three points align, teaching becomes systematic. When they don’t, everything becomes harder.

And this is where understanding the triangle becomes critical for AI adoption.

The Illusion of Alignment: A Real Example

The obvious question: Can’t teachers just prompt AI to ensure alignment?

For example: “Create a lesson on photosynthesis aligned to NGSS MS-LS1-6.”

To test this, I entered this prompt into ChatGPT. Here’s what happened:

What ChatGPT got right:

Correctly cited standard MS-LS1-6

Included three-dimensional learning (SEP, DCI, CCC)

Used NGSS language (phenomena, CER framework)

Featured hands-on investigation with Elodea plants

Mentioned photosynthesis, matter cycling, and energy flow

On the surface, this might seem aligned. The lesson has all the right keywords, follows NGSS structure, and would probably pass a quick skim by a hurried teacher.

But here’s what the standard actually requires:

MS-LS1-6: “Construct a scientific explanation based on evidence for the role of photosynthesis in the cycling of matter and flow of energy into and out of organisms.”

The key phrase: “into and out of organisms” (plural). This demands systems-level thinking—understanding how energy and matter flow between producers, consumers, and decomposers in an ecosystem.

What the AI lesson actually taught:

What happens inside a plant during photosynthesis (organism-level)

The chemical equation (molecular-level)

Observation of oxygen bubbles from Elodea (single organism)

What the AI assessment actually measured:

“How does energy enter ecosystems?” (Recall)

“How does matter cycle during photosynthesis?” (Recall)

“Why is photosynthesis essential for life?” (Recall)

The triangle was broken in three places:

WHAT (Standard): Construct explanation for photosynthesis’s role in cycling matter and flowing energy between organisms (systems-level, construction skill, SEP: Constructing Explanations)

HOW WELL (Assessment): Answer recall questions about the photosynthesis process (organism-level, recall skill, Remember/Understand on Bloom’s)

HOW (Instruction): Teacher explains connections while students observe single organism (teacher-led explanation, organism-level observation)

All three elements mentioned photosynthesis. None aligned in cognitive demand, scope, or skill development.

The problem isn’t surface features; it’s relational properties.

ChatGPT matched:

Topic (photosynthesis)

Keywords (matter, energy, cycling)

Structure (NGSS format, CER framework)

Engagement (hands-on investigation)

ChatGPT missed:

Cognitive demand alignment: Standard requires construction, assessment measures recall

Scope alignment: Standard addresses systems, instruction focuses on single organisms

Skill development: Instruction provides teacher-led explanation, assessment expects student-constructed explanation

Phenomenon coherence: Anchoring question about rainforest ecosystems, investigation examines one plant in isolation
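One way to picture the difference between the two lists is a minimal Python sketch. Everything in it is my own illustrative encoding of the example above: the `Vertex` fields, the labels such as "system" and "construct", and the keyword set are hypothetical, not output from any real tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vertex:
    """One corner of the triangle (hypothetical encoding for illustration)."""
    name: str
    keywords: frozenset
    scope: str    # e.g. "system" or "organism"
    demand: str   # e.g. "construct", "recall", "teacher-led"


def shared_keywords(vertices):
    """Surface check: keywords that appear at every corner."""
    return frozenset.intersection(*(v.keywords for v in vertices))


def relational_mismatches(standard, others):
    """Relational check: compare scope and demand against the standard."""
    problems = []
    for v in others:
        for attr in ("scope", "demand"):
            want, got = getattr(standard, attr), getattr(v, attr)
            if want != got:
                problems.append(f"{v.name} {attr}: {got} (standard needs {want})")
    return problems


kw = frozenset({"photosynthesis", "matter", "energy"})
standard = Vertex("standard", kw, "system", "construct")
assessment = Vertex("assessment", kw, "organism", "recall")
instruction = Vertex("instruction", kw, "organism", "teacher-led")

print(sorted(shared_keywords([standard, assessment, instruction])))
for problem in relational_mismatches(standard, [assessment, instruction]):
    print(problem)
```

The keyword check finds full overlap, while the relational check flags both scope and demand for the assessment and for the instruction. Same lesson, two very different verdicts.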

A teacher using this lesson would teach a perfectly competent introduction to photosynthesis, but students would likely struggle on the standards-aligned unit assessment.

This is the illusion of alignment. It looks right, sounds right, and passes superficial review. But it prepares students for a different kind of thinking than the standard requires.

Why “Just Prompting Better” Doesn’t Solve This

Here’s the question I get most often: “Can’t we just write better prompts?”

Yes—and more specific prompts do help. But here’s what matters for adoption: Teachers need to see aligned outputs without having to diagnose and correct misalignment themselves.

Look at the photosynthesis example. To get a truly aligned lesson, a teacher would need to prompt something like:

“Don’t focus on what happens inside one plant. I need instruction that traces how energy flows between multiple organisms in an ecosystem. The assessment should measure students’ ability to construct a systems-level explanation using evidence, not recall the chemical equation. And the instruction needs to move students from watching me construct an explanation, to constructing one together with support, to constructing one independently—because that’s what the assessment will ask them to do alone.”

That prompt would work—but it requires already knowing that the original output was misaligned at the level of scope (organism vs. systems), cognitive demand (recall vs. construction), and instructional scaffolding (modeling vs. gradual release).

For AI to cross the adoption chasm from early adopters to early majority, teachers need to trust the outputs are aligned by default—not spend time debugging them.

The most promising AI tools build alignment awareness into how they generate content. When a teacher requests materials for MS-LS1-6, the AI should automatically:

Recognize this requires systems-level thinking (not organism-level)

Know this is a “construct explanation” standard (not recall)

Generate instruction that scaffolds explanation-construction progressively

Create assessments that measure the same cognitive demand instruction develops

This is why prompting alone can’t solve the problem. The AI needs to be designed with alignment awareness built in, not dependent on users to specify every dimension of coherence. That is what separates AI tools teachers try once from tools they integrate into practice.

Consider what actually needs to align:

Vertical Alignment: Standards exist in learning progressions across grade levels. Math standards build fraction understanding deliberately:

Grade 3: Understand fractions as parts of a whole

Grade 4: Understand fractions as multiples of unit fractions

Grade 5: Apply that understanding to multiply fractions

An AI generating “a lesson on multiplying fractions” for grade 5 might create procedural instruction (multiply numerators, multiply denominators) that works in isolation but breaks the conceptual through-line. The standard says “apply and extend previous understandings,” but which specific understanding from grade 4? An AI can’t know without understanding the progression.

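Cognitive Demand Alignment: Webb’s alignment work, which relies on his Depth of Knowledge (DOK) levels, distinguishes between categorical alignment (same topic, which Webb calls categorical concurrence) and depth alignment (same cognitive complexity, which he calls depth-of-knowledge consistency).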

A lesson on theme in literature and an assessment on theme might be categorically aligned while cognitively misaligned:

Instruction: Identify theme with sentence starters (DOK 1-2: Recall/Skill)

Assessment: Evaluate whether author’s development of theme is effective and justify with evidence (DOK 3-4: Strategic Thinking/Extended Thinking)

The instruction develops identification. The assessment requires evaluation and justification. Students received no practice in the evaluative thinking the assessment demands.

Assessment-Instruction Alignment: An 8th grade teacher requests materials for the math standard: “Solve linear equations in one variable.”

AI generates:

Lesson on two-step equations with worked examples

Practice: 3x + 5 = 14, 2(x - 3) = 10

Assessment with similar problems

This looks aligned. But the standard specifically emphasizes equations with variables on both sides and cases with infinitely many or no solutions. The AI generated practice for a prerequisite skill that appears in the learning progression but isn’t the target standard. Students could master the practice and still fail a standards-aligned assessment asking them to solve 3x + 7 = 2x + 15 or recognize that 2(x + 3) = 2x + 6 has infinitely many solutions.
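To make the three cases concrete, here is a minimal Python sketch that classifies an equation of the form ax + b = cx + d. It is my own illustration: `solve_linear` is a hypothetical helper, and equations with parentheses are expanded by hand before being passed in.

```python
from fractions import Fraction


def solve_linear(a, b, c, d):
    """Solve a*x + b = c*x + d for x.

    Returns ("one", x), ("none", None), or ("infinite", None).
    """
    coeff = a - c   # collect the variable terms on the left
    const = d - b   # collect the constants on the right
    if coeff != 0:
        return ("one", Fraction(const, coeff))
    if const == 0:
        return ("infinite", None)   # identity: true for every x
    return ("none", None)           # contradiction: never true


# The practice items the AI generated (variable on one side only)
print(solve_linear(3, 5, 0, 14))    # 3x + 5 = 14
print(solve_linear(2, -6, 0, 10))   # 2(x - 3) = 10, expanded to 2x - 6 = 10

# The items the standard actually targets
print(solve_linear(3, 7, 2, 15))    # 3x + 7 = 2x + 15
print(solve_linear(2, 6, 2, 6))     # 2(x + 3) = 2x + 6, expanded to 2x + 6 = 2x + 6
```

The practice items only ever exercise the first branch with c = 0. The target standard needs all three branches: 3x + 7 = 2x + 15 has the single solution x = 8, while 2x + 6 = 2x + 6 is true for every x.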

These misalignments are invisible if you only check whether the same keywords appear in all three places.

Research by Andrew Porter on alignment shows that what matters is the intersection of content and cognitive demand, not just whether the same topic appears in standards, instruction, and assessment. His surveys reveal that teachers might spend 40% of time on a topic representing 10% of assessed standards, or assessments might emphasize application while instruction emphasizes recall.
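Porter's alignment index is usually written as 1 minus half the sum of absolute differences between two sets of proportions, where each proportion is the share of emphasis in one topic-by-cognitive-demand cell. The sketch below applies that formula to numbers I invented for illustration; the topics, demand labels, and proportions are hypothetical, not Porter's data.

```python
def alignment_index(first, second):
    """Porter-style index: 1 - (sum of absolute cell differences) / 2.

    Each argument maps a cell to its proportion of emphasis, and each
    set of proportions is assumed to sum to 1.
    """
    cells = set(first) | set(second)
    gap = sum(abs(first.get(c, 0.0) - second.get(c, 0.0)) for c in cells)
    return 1 - gap / 2


def by_topic(cells):
    """Collapse (topic, demand) cells to topic totals, ignoring demand."""
    totals = {}
    for (topic, _demand), share in cells.items():
        totals[topic] = totals.get(topic, 0.0) + share
    return totals


# Invented proportions: same topic emphasis, opposite cognitive demand
instruction = {
    ("fractions", "recall"): 0.5, ("fractions", "apply"): 0.1,
    ("geometry", "recall"): 0.3, ("geometry", "apply"): 0.1,
}
assessment = {
    ("fractions", "recall"): 0.1, ("fractions", "apply"): 0.5,
    ("geometry", "recall"): 0.1, ("geometry", "apply"): 0.3,
}

topic_only = alignment_index(by_topic(instruction), by_topic(assessment))
with_demand = alignment_index(instruction, assessment)
print(f"topic only: {topic_only:.2f}, topic x demand: {with_demand:.2f}")
```

With these made-up numbers, instruction and assessment look perfectly aligned when only topics are compared, and far less aligned once cognitive demand is part of each cell.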

What Gets Teachers From Trying to Trusting

Rogers’ Diffusion of Innovations framework identifies five characteristics that determine whether innovations spread: relative advantage, compatibility, complexity, trialability, and observability.

For AI tools in education, compatibility matters most—specifically, compatibility with the Curriculum Coherence Triangle.

Three design principles separate AI tools teachers try once from tools they integrate into practice:

Principle 1: Standards-Grounded Generation That Understands Progressions

Effective AI tools don’t just match keywords to standards. They understand standards as nodes in learning progressions.

When a teacher requests materials for a 5th grade fraction standard, the AI should surface:

“This standard builds on grade 4 understanding of fractions as multiples of unit fractions”

“Students should already be able to [prerequisite skills]”

“This prepares students for grade 6 work on [next steps in progression]”

“Common misconceptions at this level include [research-based challenges]”

This context prevents the procedural-only instruction that breaks conceptual through-lines.
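As a rough sketch of what "standards as nodes in learning progressions" could look like in code, here is a small Python example built on the fraction progression described earlier. The identifiers such as `G4.FRAC` are placeholders I made up, not official standard codes, and the structure is an assumption about how a tool might store this, not a description of any existing platform.

```python
from dataclasses import dataclass, field


@dataclass
class StandardNode:
    code: str       # hypothetical identifier, not an official code
    grade: int
    summary: str
    builds_on: list = field(default_factory=list)   # prerequisite codes


PROGRESSION = {
    "G3.FRAC": StandardNode("G3.FRAC", 3, "Understand fractions as parts of a whole"),
    "G4.FRAC": StandardNode("G4.FRAC", 4,
                            "Understand fractions as multiples of unit fractions",
                            ["G3.FRAC"]),
    "G5.FRAC": StandardNode("G5.FRAC", 5,
                            "Apply that understanding to multiply fractions",
                            ["G4.FRAC"]),
}


def progression_context(code, progression=PROGRESSION):
    """List what a standard builds on and what it prepares students for."""
    node = progression[code]
    prior = [progression[c] for c in node.builds_on]
    later = [n for n in progression.values() if code in n.builds_on]
    lines = [f"Grade {node.grade}: {node.summary}"]
    lines += [f"Builds on grade {p.grade}: {p.summary}" for p in prior]
    lines += [f"Prepares for grade {n.grade}: {n.summary}" for n in later]
    return lines


for line in progression_context("G4.FRAC"):
    print(line)
```

The point of the structure is that a request for one standard can always be answered with its neighbors attached, so generated materials start from the prerequisite understanding instead of from the keyword.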

Some emerging platforms require teachers to indicate where students are in progressions, not just which standard they’re addressing today. This shifts AI from “content generator” to “progression-aware instructional support.”

Principle 2: Visible Coherence Across Cognitive Demand

When AI generates instructional materials, it should make alignment structure visible:

Instead of:

“Here’s a lesson on analyzing author’s purpose.”

More useful:

“This lesson develops students’ ability to ANALYZE (Bloom’s: Analyze) how specific word choices create tone and reveal purpose. The instruction includes:

Modeling analysis of word choice → tone → purpose connections (teacher demonstrates the thinking)

Guided practice comparing two texts with different purposes (scaffolded application)

Independent analysis with justification (transfer to new context)

The formative assessment asks students to ANALYZE a new text, identify word choices that reveal purpose, and EXPLAIN their reasoning (same cognitive demand as instruction developed).

⚠️ Note: If your summative assessment requires students to EVALUATE the effectiveness of author’s choices, students will need additional instruction in evaluation criteria beyond what this lesson provides.”

This makes the triangle visible and reveals potential mismatches before they create learning gaps.
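The warning in that example could come from a very simple comparison. Here is a minimal sketch, assuming the lesson and the summative assessment are each tagged with a level from the revised Bloom's taxonomy; the function name and the wording of the note are my own placeholders.

```python
# Revised Bloom's taxonomy, ordered from lowest to highest cognitive demand
BLOOM = ["remember", "understand", "apply", "analyze", "evaluate", "create"]


def coherence_note(lesson_level, assessment_level):
    """Return a warning when the assessment sits above the lesson on Bloom's."""
    gap = BLOOM.index(assessment_level) - BLOOM.index(lesson_level)
    if gap > 0:
        return (
            f"Note: the assessment asks students to {assessment_level.upper()}, "
            f"but this lesson develops {lesson_level.upper()}. "
            "Plan additional instruction before assessing."
        )
    return None   # assessment demand is at or below what the lesson develops


print(coherence_note("analyze", "analyze"))
print(coherence_note("analyze", "evaluate"))
```

A real tool would need far more than a single tag per lesson, but even this ordering check is enough to surface the ANALYZE versus EVALUATE mismatch before students meet it on a test.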

Research on teacher decision-making shows that teachers evaluate instructional materials based on alignment to objectives, appropriateness for students, and assessment connection (Remillard, 2005). Making these connections explicit in AI outputs reduces the cognitive work teachers must do to verify coherence.

Principle 3: Evidence-Responsive Adaptation That Maintains Instructional Focus

There’s a difference between showing teachers data and helping teachers use evidence to adjust instruction.

Consider formative assessment data showing students can solve 2x + 5 = 13 but struggle with 3x + 7 = 2x + 15 (variables on both sides).

Generic AI response:

“Students are struggling. Try:

More practice problems

Different manipulatives

Peer tutoring

Video tutorials”

These suggestions don’t address the specific learning gap.

Evidence-responsive AI:

“These students have mastered two-step equations (prerequisite skill verified). The gap is specific to equations with variables on both sides. This requires understanding that we can isolate the variable by moving like terms—a conceptual leap beyond procedural steps.

Instructional adjustments that maintain focus on standard 8.EE.C.7:

Bridge from mastery: Start with what they can do (2x + 5 = 13), then show what changes when variables appear on both sides (x + 5 = 2x)

Conceptual emphasis: Before procedural steps, ensure students understand WHY we move variable terms (to collect like terms) not just HOW

Graduated complexity: Progress from x + 5 = 2x → 2x + 3 = x + 7 → 3x + 7 = 2x + 15

Metacognitive check: Can students explain their reasoning, not just execute steps?

This maintains alignment to your target standard while addressing the evidence-revealed gap.”

The first response treats all struggles as equivalent. The second diagnoses the specific gap, connects it to the learning progression, and suggests adjustments that maintain instructional coherence.
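The diagnostic step in the second response can be sketched in a few lines of Python. This is an illustration under stated assumptions: the skill tags, the sample responses, and the 0.8 mastery threshold are all invented, and `find_gaps` is a hypothetical helper.

```python
from collections import defaultdict


def find_gaps(responses, prerequisites, mastery=0.8):
    """Find skills below mastery whose prerequisites are all mastered.

    responses: list of (skill, correct) pairs from a formative assessment.
    prerequisites: maps a skill to the skills it builds on.
    mastery: assumed threshold; a prerequisite with no data counts as unverified.
    """
    totals = defaultdict(lambda: [0, 0])   # skill -> [correct, attempted]
    for skill, correct in responses:
        totals[skill][0] += int(correct)
        totals[skill][1] += 1
    rate = {skill: c / n for skill, (c, n) in totals.items()}

    gaps = []
    for skill, r in rate.items():
        prereqs_ok = all(rate.get(p, 0.0) >= mastery
                         for p in prerequisites.get(skill, []))
        if r < mastery and prereqs_ok:
            gaps.append(skill)
    return gaps


# Invented class results: strong on two-step items, weak on variables on both sides
responses = (
    [("two_step", True)] * 5
    + [("both_sides", True)] + [("both_sides", False)] * 3
)
prerequisites = {"both_sides": ["two_step"]}

print(find_gaps(responses, prerequisites))
```

Because the prerequisite is verified before the gap is reported, the output points at the one skill that needs bridging instead of a blanket "students are struggling."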

What This Reveals About Instructional Coherence

The Curriculum Coherence Triangle isn’t just a framework for designing better AI. It’s a lens for understanding what makes teaching systematically effective.

Marzano’s meta-analysis identified guaranteed and viable curriculum as the school-level factor most strongly correlated with student achievement. “Guaranteed” means specific content is taught regardless of which teacher a student has. “Viable” means there’s adequate time to teach that content.

Both require alignment. If what teachers teach (HOW) doesn’t match what students are assessed on (HOW WELL), the curriculum isn’t guaranteed. If AI generates content that expands beyond agreed-upon standards (WHAT) or introduces scope creep, the curriculum isn’t viable.

Research on Professional Learning Communities shows that the most productive teacher collaboration starts with “What’s the essential learning?” (WHAT), then “How will we know students learned it?” (HOW WELL), then “What instructional approaches work best?” (HOW) (DuFour et al., 2016).

Teams that skip steps or start in the wrong order spend time without improving outcomes. AI tools that ignore this sequence create the same problem at scale—generating content that looks aligned while breaking the relational coherence that makes curriculum effective.

The Design Challenge Ahead

AI can generate impressive content. The question is whether it can strengthen the instructional systems that help all students learn.

That requires understanding not just what teachers do, but why they do it in that specific order:

Standards before assessment (ensuring WHAT aligns to HOW WELL in both content and cognitive demand)

Assessment before instruction (ensuring HOW WELL aligns to HOW in skill development)

Evidence informing adjustment (maintaining the triangle while responding to data)

The most promising AI tools don’t try to replace this system. They try to make it work better by:

Understanding standards as progressions, not isolated skills

Making cognitive demand alignment visible across instruction and assessment

Distinguishing between surface-level keyword matching and deep relational coherence

Suggesting evidence-based adjustments that maintain instructional focus

These capabilities support adoption not because they’re technologically impressive, but because they’re compatible with how curriculum and instruction actually work.

Rogers’ research shows that innovations spread when they fit existing systems rather than require wholesale replacement. For AI in education, that means respecting the Curriculum Coherence Triangle—the alignment between learning objectives, assessment evidence, and instructional strategies that holds effective teaching together.

The triangle has structured systematic instruction for decades. AI that strengthens it will scale. AI that creates the illusion of alignment while breaking relational coherence will remain at the margins, used by innovators who can compensate for misalignment but never crossing the chasm to early majority adoption.

The question for AI builders is “Can we create tech that strengthens the proven and aligned instructional systems teachers already use?”

That’s the difference between tools teachers try and tools teachers trust.

Never Stop Asking,

Nathan